
The dark side of social media – Colloquium at the 22nd Sociolinguistics Symposium

30 June 2018, Auckland, New Zealand

Chair: Darja Fišer, University of Ljubljana and Jožef Stefan Institute, Slovenia

Co-chair: Vojko Gorjanc, University of Ljubljana, Slovenia

While intolerant, abusive and hate speech are not new phenomena, they were limited to specific off-line and local contexts until the advent of new communication technologies. The anonymity and instantaneity of these technologies, coupled with their ever-growing importance as a source of information and communication, have given such speech an unprecedented boost and a global dimension. Because of this, new interdisciplinary theoretical and analytical methods and approaches are needed to improve our understanding of the shifting patterns of such practices in different parts of the world, with a particular focus on tackling their proliferation in the new media and the radicalisation of online space in the contemporary, increasingly multicultural information society.

The aim of this colloquium is to give an overview of these phenomena as well as of research and legal practices in Europe, the USA, Japan and New Zealand, in order to address the specific issues pertaining to the propagation of intolerant, abusive and hate content in the new media, to contribute to a better understanding thereof, and to devise strategies for its containment and mitigation. The talks presented at the colloquium tackle a wide range of topics and on-line platforms, such as nationalist discourse on an on-line forum of white supremacy groups or explicitly and implicitly abusive discourse against the LGBTQ+ community on Twitter. The authors use a rich set of methodological frameworks, ranging from the discourse-historical approach in discourse studies to machine learning. The colloquium also offers a comprehensive legal analysis, based on data from 10 EU countries, with recommendations for an improved treatment of hate speech in the new era, as well as a contribution on hate speech that spills over from the virtual world into real-world streets.

TALK 1

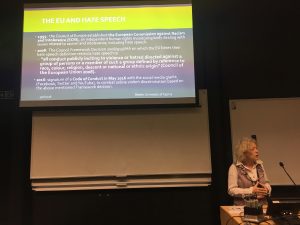

Hate speech: what definition? What solutions?

Fabienne Baider, University of Cyprus, Cyprus

On 31 May 2016, the European Commission and the main IT companies made public a Code of Conduct targeting illegal hate speech online. The parameters chosen by the Commission are grounded in the Council Framework Decision 2008/913/JHA of 2008. In this framework, hate speech is confined to illegal speech, defined as an open call to violence or hatred against specific groups targeted because of their race, colour, religion, descent or national or ethnic origin. This study presents and analyses the results on online hate speech obtained within the EU program CONTACT. In particular, it evaluates data from 10 EU countries. From these data we derive recommendations and a working definition of hate speech as ‘abusive speech, calling for violence or hatred or not, against specific groups of people because of their origins, religion, including as well gender or sexual orientation’. Our conclusions include:

1. the necessity of using a classification of hate speech based on prior studies carried out in the specific sociocultural context under study, so as to show how the EU definition, by being restrictive, may tone down the extent of illegal hate speech. Indeed, we put forward arguments for including legal hate speech in our data, on the reasoning that such speech may precede, accompany or provide the context for illegal hate speech;

2. the necessity of adopting a discourse analysis focused on specific, high-frequency stereotypical words for specific groups, rather than treating hate speech as the use of one common abusive language for all groups;

3. the need to take into account implicatures, i.e. the implicit meaning of dehumanizing metaphors or sarcasm, since these implicatures do play a role in escalating violence;

4. the need for any automatic detection of illegal hate speech to build on contextualized research in the specific culture under study.

[slides]

TALK 2

Bigotry online: Commenters’ responses to the ‘give nothing’ to racism campaign in New Zealand

Philippa Smith, Auckland University of Technology, Auckland, New Zealand

With a growing concern about the encroachment of ‘subtle’ racism (Ikuenobe, 2011) in New Zealanders’ daily behaviour, this nation’s Human Rights Commission, on 15 June 2017, sought to draw public attention to its pervasiveness by launching the ‘give nothing’ campaign. As part of this campaign the Commission engaged the New Zealander of the year – film director Taika Waititi – to front a satirical video which mimicked the style of a charity seeking support for a noble cause. In this case the irony was the request for New Zealanders to “give nothing to racism and the spread of intolerance” (HRC, 2017). The video sought to use humour to make people more conscious of their choice of words and actions that, while subtle, could be racially offensive.

Taking the view that satire, as a ‘discursive act’, is only successful if the satiree (whoever receives the satire) accepts it (Simpson, 2003), this study examines 115 online comments to gauge whether the satire was accepted or not, and in what ways commenters responded. Using the ‘discourse-historical’ approach of critical discourse studies (Reisigl & Wodak, 2001; 2009), I review the video and the online comments to understand the linguistic features and discursive strategies that operate in a triangle of relationships between the satirist, the satiree and the target (Simpson, 2003). Findings showed that while some commenters happily supported the campaign, the majority used the online platform for trolling and overt expressions of racism, which in itself was ironic given the campaign’s concern with subtle racism.

References:

Human Rights Commission (2017). Give nothing to racism. Retrieved on 27 July, 2017 from https://www.hrc.co.nz/news/give-nothing-racism/

Ikuenobe, P. (2011). Conceptualizing racism and its subtle forms. Journal for the Theory of Social Behaviour, 41(2).

Reisigl, M., & Wodak, R. (2001). Discourse and discrimination: Rhetorics of racism and antisemitism. London, England: Routledge.

Reisigl, M., & Wodak, R. (2009). The discourse-historical approach (DHA). In R. Wodak & M. Meyer (Eds.), Methods of critical discourse analysis (pp. 87–121). London, England: Sage Publications.

Simpson, P. (2003). On the Discourse of Satire: Towards a Stylistic Model of Satirical Humour. Amsterdam and Philadelphia, PA: John Benjamins Publishing Co.

[slides]

TALK 3

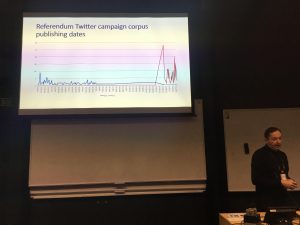

What Does Hate Look Like: Twitter Discourse on LGBTQ+ in Slovenia

Vojko Gorjanc, University of Ljubljana, Slovenia

Since Twitter, as one of the main social platforms, plays an important part in forming gender and sexual identities, we decided to perform a corpus-based study of the Twitter discourse pertaining to the LGBTQ+ community in Slovenia. The corpus for our study was extracted from the Janes corpus of Slovene user-generated content, which contains almost 215 million tokens of Slovene blog posts and comments, forum posts, news comments, tweets, etc. The corpus is rich in annotated socio-demographic and linguistic metadata, both at the account level, such as account type and the author’s gender and region, and at the text level, such as text or sentiment. For the purpose of our study we extracted a sub-corpus of the Twitter accounts of two key referendum campaign players who either supported or opposed the change of Slovenian legislation at the end of 2015, together with a) all tweets connected to both campaigns through campaign hashtags and b) tweets with additional hashtags identified in the preceding step.

Our corpus analysis is quantitative and qualitative in its nature. The quantitative analysis with key words and sentiment analysis provides basic hints about the discourse used for the subsequent qualitative analysis. The qualitative approach is based on content analysis, followed by a critical discourse analysis exposing how one social group imposes power control over the other, and tries to limit the freedom of action of others, using the concepts of “normal” and “natural” as key concepts in forming gender and sexual identities.

We will show how persistent heteronormativity is in Twitter discourse as well; so much so that it seems natural, whereas any counterforce is seen as disruptive, and this is a key factor for abusive discourse against the LGBTQ+ community. Since the qualitative analysis was conducted manually, we also focused on the non-verbal parts of discourse. Our analysis thus shows that images in posts are very powerful discourse elements, both when the text is explicitly abusive and when the verbal part of a tweet remains neutral on the surface, with or without an abusive subtext, but is accompanied by a very explicitly abusive non-verbal component.

TALK 4

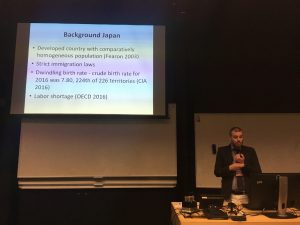

Hate Speech on Street Level in Japan – Interaction and Discourse between Hate Groups and Target Groups

Goran Vaage, Kobe College, Japan

Hate speech comes in many forms, both in terms of linguistic content and context. Furthermore, these two usually correlate in that hate speech from political organizations and other organized groups tends to centre around publicized covert racism, whereas at the other end of the spectrum, hate speech in computer mediated communication is often anonymous, derogatory and direct. Hate speech on street level, that is to say demonstrations, actual speeches, posters and such, falls between these two vertices, being semi-organized, taking place in the public space, but still being personal.

This talk analyses the interaction and discourse between hate groups and target groups on street level in Japan, based on a corpus gathered from fieldwork in the Kansai region of Japan in 2016 and 2017, other primary sources such as media coverage, and directions from stakeholder groups, for example banner wording recommendations.

The data suggest that the discourse of hate groups in Japan can be divided broadly into three types: discontent with current laws, welfare systems and court decisions; appeals urging members of target groups as well as the Japanese people to take various measures; and more or less direct expressions of hate towards a certain target group or nation state. On the other hand, the discourse of the target groups and their campaigners, who often outnumber the hate groups, is more diverse, ranging from political counterarguments, explanations to passers-by, offers of friendship, and calls and protests against racism, to counterattacks on hate groups. The choice of message and discourse on both sides appears to be mostly a matter of personal choice, and even though the heat of the interaction can at times escalate, participants are in the public space where Japanese rules for social conduct still seem to apply, demonstrating that hate speech on street level is also a social act.

TALK 5

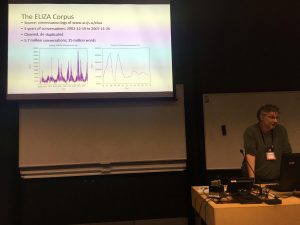

Chatbot abuse

Darja Fišer, Jožef Stefan Institute and University of Ljubljana, Slovenia

Tomaž Erjavec, Jožef Stefan Institute, Slovenia

Conversational agents, such as chatbots on the web, virtual assistants on smartphones and automated call handling systems, are software agents which perform tasks or services for their users (Cassell 2000). As they are being introduced into everyday tasks and technologies, human-computer interaction is becoming an increasingly important research topic not only in computer science, where researchers’ primary concern is how to create a machine that can hold a conversation, but also in communication studies and the social sciences, where the social role of conversational agents is only starting to be investigated. One of the most underresearched phenomena in human interaction with conversational agents is the abusive behaviour of users towards the agent, which is much higher in both volume and intensity than in human-human interaction (Bartneck et al. 2005).

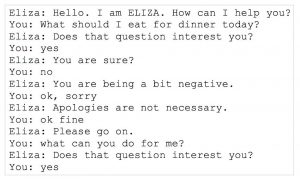

In this talk we present the results of a corpus analysis of the abusive language in conversations with the first on-line implementation of the first chatbot, Eliza (Bradeško and Mladenić 2012). The chatbot, which was developed in 1966 and mimics the behaviour of a Rogerian psychotherapist, uses simple pattern matching and reformulates users’ sentences into questions (see Figure 2). It is freely available on-line for visitors to use as they like; there is no additional documentation, and there are no specified rules or foreseen goals apart from a short prompt that acts as a conversation starter (see Figure 1).
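The pattern-matching mechanism described above can be illustrated with a minimal sketch. The rules, the pronoun table and the fallback reply below are hypothetical and chosen only for illustration; they are not the rule set of the implementation studied in this talk.

```python
import re

# Hypothetical pronoun swaps (first person -> second person), for illustration only.
PRONOUN_SWAPS = {"i": "you", "me": "you", "my": "your", "mine": "yours", "am": "are"}

# Hypothetical rules: a regular expression and a question template.
RULES = [
    (re.compile(r"^i feel (.+)$", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"^i am (.+)$", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"^my (.+)$", re.IGNORECASE), "Tell me more about your {0}."),
]

# Generic reply used when no rule matches.
FALLBACK = "Please go on."


def reflect(fragment: str) -> str:
    """Lower-case the fragment and swap first-person words for second-person ones."""
    words = fragment.lower().split()
    return " ".join(PRONOUN_SWAPS.get(word, word) for word in words)


def respond(utterance: str) -> str:
    """Return the reply of the first matching rule, or the fallback."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.match(text)
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK


print(respond("I feel sad about my job."))
print(respond("Hello"))
```

Because the reply is assembled from the user's own words, a system of this kind will echo abusive input back as readily as any other input, which is one reason such conversations are of interest for corpus analysis.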

The corpus contains all the conversations anonymous users held with Eliza over a period of 5 years (between 2002-12-19 and 2007-11-26), amounting to a total of 5.7 million conversations or 22.1 billion words. The goal of the corpus analysis is to investigate abusive language in computer-mediated settings in general and to contribute towards a better understanding of linguistic manifestations of abusive behaviour of users when engaging with conversational agents, their triggers, functions and consequences.

Bartneck, C., Rosalia, C., Menges, R. and Deckers, I., 2005. Robot abuse – a limitation of the media equation. In Proceedings of the Interact 2005 Workshop on Agent Abuse, 12 September 2005, Rome, Italy.

Bradeško, L. and Mladenić, D., 2012. A survey of chatbot systems through a Loebner prize competition. In Proceedings of Slovenian Language Technologies Society Eighth Conference of Language Technologies.

Cassell, J., 2000. Embodied conversational agents. MIT Press.

[slides]

TALK 6

Towards tackling hate online automatically

Darja Fišer, University of Ljubljana and Jožef Stefan Institute, Slovenia

Nikola Ljubešić, Jožef Stefan Institute, Slovenia

Tomaž Erjavec, Jožef Stefan Institute, Slovenia

With the unprecedented volume and speed of spreading information on social media, content providers, government institutions, non-governmental organizations and social media users alike are facing an increasingly daunting task to identify and respond to the spread of intolerant and abusive content in a timely and efficient fashion, before hateful content can cause substantial harm. To address this issue, we propose an automatized approach that employs state-of-the-art machine-learning techniques to identify and classify socially unacceptable online discourse practices (SUD).

In this talk we present the legal framework, annotation schema and dataset of SUD practices for Slovene as well as the prototype tool for automatic identification and classification of SUD on the Facebook pages of the most popular mass media in Slovenia that are being developed within the national basic research project FRENK (https://nl.ijs.si/frenk/english/).

We believe the annotation schema, dataset and tool are key stepping stones towards a comprehensive, interdisciplinary treatment of the linguistic, sociological, legal and technological dimensions of various forms of socially unacceptable discourse practices in Slovenia. From an applied perspective, the classifier has great potential to be integrated into the daily work of moderators of discussions on the most popular forums and of administrators of readers’ comments on the biggest online media sites, who cannot cope with the volume of posts using manual methods and are finding simple, in-house-built lexicon methods insufficient.
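To make the limitation of simple lexicon methods concrete, here is a minimal sketch of such a filter. It is not the FRENK tool or any moderator's actual system; the three-word lexicon and the sample comments are hypothetical and serve only to show which kinds of input a word list catches and which it misses.

```python
import re

# Hypothetical mini-lexicon, for illustration only; real lists are far larger.
LEXICON = {"idiot", "scum", "vermin"}

# Split a comment into word-like tokens (letters, digits, underscore).
TOKEN = re.compile(r"\w+")


def flag_by_lexicon(comment: str) -> bool:
    """Flag a comment if any of its tokens appears in the lexicon."""
    tokens = {token.lower() for token in TOKEN.findall(comment)}
    return not tokens.isdisjoint(LEXICON)


# Hypothetical sample comments.
comments = [
    "You are an idiot.",  # explicit lexicon word
    "You are an id1ot.",  # obfuscated spelling
    "People like that should go back where they came from.",  # implicit hostility
]

flags = [flag_by_lexicon(comment) for comment in comments]
print(flags)
```

Only the first comment is flagged. The obfuscated spelling and the implicit formulation contain no lexicon entry, so they pass unnoticed, which is the kind of gap a classifier trained on annotated data is intended to narrow.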

On a more theoretical note, we hope that the research will result in a thorough examination of the characteristics of socially unacceptable discourse as a linguistic phenomenon and of the social context in which explicit or implicit forms of discriminatory language are manifested. What is more, these insights should facilitate an improved understanding of the differences between legally acceptable and unacceptable forms of communication.

[slides]